Tutorials

1. Getting started with Micc2

Note

These tutorials are not just about how to use Micc2. Rather, they describe a workflow for setting up a Python project and developing it using best practices, with the help of Micc2.

Micc2 aims at providing a practical interface to the many aspects of managing a Python project: setting up a new project in a standardized way, adding documentation, version control, publishing the code to PyPI, building binary extension modules in C++ or Fortran, dependency management, … For all these aspects there are tools available, yet, with each new project, I found myself struggling to get everything right and looking up the details. Micc2 is an attempt to wrap all the details by providing the user with a standardized yet flexible workflow for managing a Python project. Standardizing is a great way to increase productivity. For many aspects, the tools used by Micc2 are completely hidden from the user, e.g. project setup, adding components, building binary extensions, … For other aspects Micc2 provides just the necessary setup for you to use other tools as you need them. Learning to use the following tools is certainly beneficial:

Git: for version control. Its use is optional but highly recommended. See 5. Version control and version management for some basic git coverage.

Pytest: for (unit) testing. Also optional and also highly recommended.

The basic commands for these tools are covered in these tutorials.

1.1. Creating a project with micc2

Creating a new project with micc2 is simple:

> micc2 create path/to/my-first-project

This creates a new project my-first-project in folder path/to. Note that the

directory path/to/my-first-project must either not exist, or be empty.

Typically, you will create a new project in the current working directory, say: your

workspace, so first cd into your workspace directory:

> cd path/to/workspace

> micc2 create my-first-project --remote=none

[INFO] [ Creating project directory (my-first-project):

[INFO] Python top-level package (my_first_project):

[INFO] [ Creating local git repository

[INFO] ] done.

[WARNING] Creation of remote GitHub repository not requested.

[INFO] ] done.

As the output shows, micc2 has created a new project in directory my-first-project containing a Python package my_first_project. This is a directory with an __init__.py file, containing the Python variables, classes and methods it needs to expose. This directory and its contents represent the Python module.

my-first-project          # the project directory
└── my_first_project      # the package directory
    └── __init__.py       # the file where your Python code goes

Note

Next to the package structure - a directory with an __init__.py file - Python also allows for a module structure - a mere my_first_project.py file - containing the Python variables, classes and methods it needs to expose. The module structure is essentially a single-file, Python-only approach, which often turns out to be too restrictive. As of v3.0, micc2 only supports the creation of modules with a package structure, which allows for adding submodules, command line interfaces (CLIs), and binary extension modules built from other languages such as C++ and Fortran. Micc2 greatly facilitates adding such components.

Note that the module name differs slightly from the project name. Dashes have been replaced with underscores and uppercase letters with lowercase letters in order to yield a PEP 8 compliant module name. If you want your module name to be unrelated to your project name, check out the 1.1.1. What’s in a name section.

Micc2 automatically creates a local git repository for our project (provided the git command is available) and it commits all the project files that it generated with commit message ‘And so this begun…’. The --remote=none flag prevents Micc2 from also creating a remote repository on GitHub. Without that flag, Micc2 would have created a public remote repository on GitHub and pushed that first commit (this requires that we have set up Micc2 with a GitHub username and a personal access token for it, as described in First time Micc2 setup). You can also request the remote repository to be private by specifying --remote=private.

After creating the project, we cd into the project directory. All Micc2 commands detect automatically that they are run from a project directory and consequently act on the project in the current working directory. E.g.:

> cd my-first-project

> micc2 info

Project my-first-project located at /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/my-first-project

package: my_first_project

version: 0.0.0

contents:

my_first_project top-level package (source in my_first_project/__init__.py)

Because the info subcommand, which shows information on a project, is run inside the my-first-project directory, we get the info on the my-first-project project.

To apply a Micc2 command to a project that is not in the current working directory see 1.2.1. The project path in Micc2.

Note

Micc2 has a built-in help function: micc2 --help shows the global options, which appear in front of the subcommand, and lists the subcommands, while micc2 subcommand --help prints detailed help for a subcommand.

1.1.1. What’s in a name

The name you choose for your project is not without consequences. Ideally, a project name is:

descriptive,

unique,

short.

Although one might think of even more requirements, such as being easy to type,

satisfying these three is already hard enough. E.g. the name

my_nifty_module may well be unique, but it is neither descriptive

nor short. On the other hand, dot_product is descriptive, reasonably

short, but probably not unique. Even my_dot_product is probably not

unique, and, in addition, confusing to any user that might want to adopt your

my_dot_product. A unique name - or at least a name that has not been taken

before - becomes really important when you want to publish your code for others to use

it (see 7. Publishing your code for details). The standard place to publish Python code is

the Python Package Index, where you find hundreds of

thousands of projects, many of which are really interesting and of high quality. Even

if there are only a few colleagues that you want to share your code with, you make their

life (as well as yours) easier when you publish your my_nifty_module at

PyPI. To install your my_nifty_module they will only need to type:

> python -m pip install my_nifty_module

The name my_nifty_module is not used so far, but we nevertheless recommend choosing a better name.

If you intend to publish your code on PyPI, we recommend that you create your project

with the --publish flag. Micc2 then checks if the name you want to use for your

project is still available on PyPI. If not, it refuses to create the project and asks

you to use another name for your project:

> micc2 create oops --publish

[ERROR]

The name 'oops' is already in use on PyPI.

The project is not created.

You must choose another name if you want to publish your code on PyPI.

As there are indeed hundreds of thousands of Python packages published on PyPI,

finding a good name has become quite hard. Personally, I often use a simple and short

descriptive name, prefixed by my initials, et-, which usually makes the

name unique. E.g. et-oops does not exist. This has the additional advantage

that all my published modules are grouped in the alphabetic PyPI listing.

Another point of attention is that although in principle project names can be anything supported by your OS file system, as they are just the name of a directory, Micc2 insists that module and package names comply with the PEP8 module naming rules. Micc2 derives the package (or module) name from the project name as follows:

capitals are replaced by lower-case letters,

hyphens '-' are replaced by underscores '_'.

If the resulting module name is not PEP8 compliant, you get an informative error message:

> micc2 create 1proj

The last line of that error message indicates that you can specify an explicit module name, unrelated to the project name. In that case PEP8 compliance is not checked. The responsibility is then all yours.
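The two derivation rules above can be sketched in a few lines of Python. This is only an illustration, not Micc2's actual code: the helper names derive_package_name and is_valid_module_name are hypothetical, and the validity check (a lower-case Python identifier) is a simplifying assumption about what PEP 8 compliance means here.

```python
def derive_package_name(project_name: str) -> str:
    """Hypothetical helper: lower-case the name and replace hyphens by underscores."""
    return project_name.lower().replace("-", "_")


def is_valid_module_name(name: str) -> bool:
    """Simplified check (an assumption): a lower-case, valid Python identifier."""
    return name.isidentifier() and name == name.lower()


for project in ("my-first-project", "ET-dot", "1proj"):
    package = derive_package_name(project)
    print(f"{project!r} -> {package!r} (valid: {is_valid_module_name(package)})")
```

A name such as 1proj fails the check because a Python identifier cannot start with a digit, which is why it cannot be imported as a module.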

1.2. First steps in project management using Micc2

1.2.1. The project path in Micc2

All micc2 commands accept the global --project-path=<path> parameter.

Global parameters appear before the subcommand name. E.g. the command:

> micc2 --project-path path/to/my_project info

will print the info on the project located at path/to/my_project. This can

conveniently be abbreviated as:

> micc2 -p path/to/my_project info

Even the create command accepts the global --project-path=<path>

parameter:

> micc2 -p path/to/my_project create

will attempt to create project my_project at the specified location. The

command is equivalent to:

> micc2 create path/to/my_project

The default value for the project path is the current working directory. Micc2 commands without an explicitly specified project path will act on the project in the current working directory.

1.2.2. Virtual environments

Virtual environments enable you to set up a Python environment that is isolated from the installed Python on your system and from other virtual environments. In this way you can easily cope with varying dependencies between your Python projects.

For a detailed introduction to virtual environments see Python Virtual Environments: A Primer.

When you are developing or using several Python projects simultaneously, it can become difficult for a single Python environment to satisfy all the dependency requirements of these projects. Dependency conflicts can easily arise. Python promotes and facilitates code reuse and, as a consequence, Python tools typically depend on tens to hundreds of other modules. If tool-A and tool-B both need module-C, but each requires a different version of it, there is a conflict because it is impossible to install two different versions of the same module in a Python environment. The solution that the Python community has come up with for this problem is the construction of virtual environments, which isolate the dependencies of a single project in a single environment.

For this reason it is recommended to create a virtual environment for every project you start. Here is how that goes:

1.2.2.1. Creating virtual environments

> python -m venv .venv-my-first-project

This creates a directory .venv-my-first-project representing the

virtual environment. The Python version of this virtual environment is the Python

version that was used to create it. Use the tree command to get an overview of its

directory structure:

> tree .venv-my-first-project -L 4

.venv-my-first-project

├── bin

│ ├── Activate.ps1

│ ├── activate

│ ├── activate.csh

│ ├── activate.fish

│ ├── easy_install

│ ├── easy_install-3.8

│ ├── pip

│ ├── pip3

│ ├── pip3.8

│ ├── python -> /Users/etijskens/.pyenv/versions/3.8.5/bin/python

│ └── python3 -> python

├── include

├── lib

│ └── python3.8

│ └── site-packages

│ ├── __pycache__

│ ├── easy_install.py

│ ├── pip

│ ├── pip-20.1.1.dist-info

│ ├── pkg_resources

│ ├── setuptools

│ └── setuptools-47.1.0.dist-info

└── pyvenv.cfg

11 directories, 13 files

As you can see there is a bin, include, and a lib directory.

In the bin directory you find installed commands, like activate,

pip, and the python of the virtual environment. The lib

directory contains the installed site-packages, and the include

directory contains include files of installed site-packages for use with C, C++ or

Fortran.

If the Python version you used to create the virtual environment has pre-installed

packages you can make them available in your virtual environment by adding the

--system-site-packages flag:

> python -m venv .venv-my-first-project --system-site-packages

This is especially useful in HPC environments, where the pre-installed packages typically have a better computational efficiency.
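The same can be done from Python with the standard library's venv module, which is what python -m venv runs. The sketch below is a minimal illustration: the function name create_env and the directory name .venv-demo are made up for this example, and the demo passes with_pip=False only to keep it fast.

```python
import tempfile
import venv
from pathlib import Path


def create_env(env_dir, system_site_packages=False, with_pip=True):
    """Create a virtual environment, much like ``python -m venv env_dir``."""
    builder = venv.EnvBuilder(
        system_site_packages=system_site_packages,  # the --system-site-packages flag
        with_pip=with_pip,                           # install pip into the environment
    )
    builder.create(env_dir)
    return Path(env_dir)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical location; with_pip=False skips bootstrapping pip.
        env = create_env(Path(tmp) / ".venv-demo",
                         system_site_packages=True, with_pip=False)
        # pyvenv.cfg records whether system site packages are visible.
        print((env / "pyvenv.cfg").read_text())
```

The pyvenv.cfg file printed at the end is the same file that appears at the bottom of the tree listing above.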

As to where you create these virtual environments there are two common approaches.

One is to create a venvs directory where you put all your virtual

environments. This is practical if you have virtual environments which are common to

several projects. The other one is to have one virtual environment for each project

and locate it in the project directory. Note that if you have several Python versions

on your system you may also create several virtual environments with different

Python versions for a project.

In order to use a virtual environment, you must activate it:

> . .venv-my-first-project/bin/activate

(.venv-my-first-project) >

Note how the prompt has changed to indicate that the virtual environment is active. The current Python is now that of the virtual environment, and the only Python packages available are the ones installed in it, as well as the system site packages of the corresponding Python if the virtual environment was created with the --system-site-packages flag. To deactivate the virtual environment, run:

(.venv-my-first-project) > deactivate

>

The prompt has turned back to normal.
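Besides looking at the prompt, you can ask Python itself whether it runs inside a virtual environment. The sketch below relies on the fact that, in a virtual environment, sys.prefix points to the environment while sys.base_prefix points to the Python installation it was created from. The function name in_virtual_environment is just an illustrative choice.

```python
import sys


def in_virtual_environment() -> bool:
    """Return True if the running interpreter belongs to a virtual environment."""
    # Outside a virtual environment both prefixes are identical.
    return sys.prefix != sys.base_prefix


print("interpreter:", sys.executable)
print("virtual environment active:", in_virtual_environment())
```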

So far, the virtual environment is pretty much empty (except for the system site

packages if it was created with the --system-site-packages flag). We must

install the packages that our project needs. Pip does the trick:

> python -m pip install some-needed-package

We must also install the project itself, if it is to be used in the virtual environment.

If the project is not under development, we can just run pip install. Otherwise,

we want the code changes that we make while developing to be instantaneously visible

in the virtual environment. Pip can do editable installs, but only for packages

which provide a setup.py file. Micc2 does not provide setup.py

files for its projects, but it has a simple workaround for editable installs. First

cd into your project directory and activate its virtual environment, then run

the install-e.py script:

> cd path/to/my-first-project

> source .venv-my-first-project/bin/activate

(.venv-my-first-project)> python ~/.micc2/scripts/install-e.py

...

Editable install of my-first-project is ready.

If something is wrong with a virtual environment, you can simply delete it:

> rm -rf .venv-my-first-project

and recreate it.

1.2.3. Modules and scripts

A Python script is a piece of Python code that performs a certain task. A Python module, on the other hand, is a piece of Python code that provides a client code, such as a script, with useful Python classes, functions, objects, and so on, to facilitate the script’s task. To that end client code must import the module.

Python has a mechanism that allows a Python file to behave both as a script and as a module. Consider the Python file my_first_project.py, as it was created by Micc2 in the first place. Note that Micc2 always creates project files containing fully functional examples to demonstrate how things are supposed to be done.

# -*- coding: utf-8 -*-
"""
Package my_first_project
========================

A hello world example.
"""

__version__ = "0.0.0"


def hello(who="world"):
    """A "Hello world" method.

    :param str who: whom to say hello to
    :returns: a string
    """
    result = f"Hello {who}!"
    return result

The module file starts with a file doc-string that describes what the file is about and a __version__ definition, and then goes on to define a simple hello method. A client script script.py can import the my_first_project.py module to use its hello method:

# file script.py

import my_first_project

print(my_first_project.hello("dear students"))

When executed, this results in printing Hello dear students!

> python script.py

Hello dear students!

Python has an interesting idiom for allowing a module also to behave as a script. Python defines a __name__ variable for each file it interprets. When the file is executed as a script, as in python script.py, the __name__ variable is set to "__main__", and when the file is imported the __name__ variable is set to the module name. By testing the value of the __name__ variable we can selectively execute statements depending on whether a Python file is imported or executed as a script. E.g. below we added some tests for the hello method:

#...
def hello(who="world"):
    """A "Hello world" method.

    :param str who: whom to say hello to
    :returns: a string
    """
    result = f"Hello {who}!"
    return result


if __name__ == "__main__":
    assert hello() == "Hello world!"
    assert hello("students") == "Hello students!"

If we now execute my_first_project.py the if __name__ == "__main__":

clause evaluates to True and the two assertions are executed - successfully.

So, adding an if __name__ == "__main__": clause at the end of a module allows it to behave as a script. This Python idiom comes in handy for quickly testing or debugging a module. Running the file as a script will execute the tests and raise an AssertionError if one of them fails. If so, we can run it in debug mode to see what goes wrong.
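To see the two values of __name__ side by side, the following sketch writes a throw-away file, once imports it and once executes it as a script, and records what __name__ was in each case. The file name demo_module.py and the function observe_name are made up for this illustration, and runpy.run_path is used here as a stand-in for typing python demo_module.py at the prompt.

```python
import importlib.util
import runpy
import tempfile
from pathlib import Path

# A one-line module that remembers the value of __name__ it was run with.
SOURCE = "seen_name = __name__\n"


def observe_name():
    """Return the value of __name__ when imported and when run as a script."""
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "demo_module.py"   # hypothetical file name
        path.write_text(SOURCE)

        # Case 1: import the file as a module named "demo_module".
        spec = importlib.util.spec_from_file_location("demo_module", path)
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)
        imported = module.seen_name

        # Case 2: execute the file the way ``python demo_module.py`` would.
        executed = runpy.run_path(str(path), run_name="__main__")["seen_name"]
    return imported, executed


imported_name, script_name = observe_name()
print("imported:", imported_name)
print("executed:", script_name)
```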

While this is a very productive way of testing, it is a bit on the quick and dirty side. As the module code and the tests become more involved, the module file will soon become cluttered with test code and a more scalable way to organise your tests is needed. Micc2 has already taken care of this.

1.2.4. Testing your code

Test driven development is a software development process that relies on the repetition of a very short development cycle: requirements are turned into very specific test cases, then the code is improved so that the tests pass. This is opposed to software development that allows code to be added that is not proven to meet requirements. The advantage of this is clear: the shorter the cycle, the smaller the code that is to be searched for bugs. This allows you to produce correct code faster, and in case you are a beginner, also speeds your learning of Python. Please check Ned Batchelder’s very good introduction to testing with pytest.

When Micc2 created project my-first-project, it not only added a hello method to the module file, it also created a test script for it in the tests directory of the project directory. The tests for the my_first_project module are in file tests/my_first_project/test_my_first_project.py. Let’s take a look at the relevant section:

# -*- coding: utf-8 -*-
"""Tests for my_first_project package."""

import my_first_project


def test_hello_noargs():
    """Test for my_first_project.hello()."""
    s = my_first_project.hello()
    assert s == "Hello world!"


def test_hello_me():
    """Test for my_first_project.hello('me')."""
    s = my_first_project.hello('me')
    assert s == "Hello me!"

The tests/my_first_project/test_my_first_project.py file contains two tests: one for testing the hello method with a default argument, and one for testing it with argument 'me'. Tests like this are very useful to ensure that during development the changes to your code do not break things. There are many Python tools for unit testing and test driven development. Here, we use Pytest. The tests are automatically found and executed by running pytest in the project directory:

> pytest tests -v

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1 -- /Users/etijskens/.pyenv/versions/3.8.5/bin/python

cachedir: .pytest_cache

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/my-first-project

collecting ... collected 2 items

tests/my_first_project/test_my_first_project.py::test_hello_noargs PASSED [ 50%]

tests/my_first_project/test_my_first_project.py::test_hello_me PASSED [100%]

============================== 2 passed in 0.03s ===============================

Specifying the tests directory ensures that Pytest looks for tests only in

the tests directory. This is usually not necessary, but it prevents

pytest’s test discovery algorithm from collecting tests that are not meant to be run. The

-v flag increases pytest’s verbosity. The output shows that pytest

discovered the two tests put in place by Micc2 and that they both passed.

Note

Pytest looks for test methods in all test_*.py or *_test.py files in the current directory and accepts (1) test-prefixed functions outside classes and (2) test-prefixed methods inside Test-prefixed classes as test methods to be executed.
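The two forms mentioned in this note could look as follows. This is a sketch, not code generated by Micc2: the hello function is repeated here as a stand-in for my_first_project.hello so that the example runs on its own, and the names test_hello_default, TestHello and helper are chosen for illustration only.

```python
def hello(who="world"):
    """Stand-in for my_first_project.hello, repeated to keep the sketch self-contained."""
    return f"Hello {who}!"


def test_hello_default():
    """Collected by pytest: a test-prefixed function outside a class."""
    assert hello() == "Hello world!"


class TestHello:
    """Collected by pytest: a Test-prefixed class (it must not define __init__)."""

    def test_hello_me(self):
        """Collected: a test-prefixed method inside a Test-prefixed class."""
        assert hello("me") == "Hello me!"

    def helper(self):
        """Not collected: the name lacks the test prefix."""
        return hello("nobody")
```

Saved in a file whose name matches test_*.py, this would make pytest report two tests; the helper method is ignored.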

If a test fails, you get a detailed report to help you find the cause of the error and fix it.

Note

A failing test does not necessarily imply that your module is faulty. Test code is also code and can therefore contain errors, too. It is not uncommon that a failing test is caused by a buggy test rather than a buggy method or class.

1.2.4.1. Debugging test code

When the report provided by Pytest does not yield an obvious clue on the cause of the

failing test, you must use debugging and execute the failing test step by step to find

out what is going wrong where. From the viewpoint of Pytest, the files in the

tests directory are modules. Pytest imports them and collects the test

methods, and executes them. Micc2 also makes every test module executable using the

Python if __name__ == "__main__": idiom described above. At the end of every test

file you will find some extra code:

if __name__ == "__main__":                                   # 0
    the_test_you_want_to_debug = test_hello_noargs           # 1
                                                             # 2
    print("__main__ running", the_test_you_want_to_debug)    # 3
    the_test_you_want_to_debug()                             # 4
    print('-*# finished #*-')                                # 5

On line # 1, the test method we want to debug, in this case test_hello_noargs, is assigned to the variable the_test_you_want_to_debug, which thus becomes an alias for the test method. Line # 3 prints a message with the name of the test method being debugged to assure you that you are running the test you want. Line # 4 calls the test method, and, finally, line # 5 prints a message just before quitting, to assure you that the code ran without problems until the end.

(.venv-my-first-project) > python tests/my_first_project/test_my_first_project.py

__main__ running <function test_hello_noargs at 0x1037337a0> # output of line # 3

-*# finished #*- # output of line # 5

Obviously, you can run this script in a debugger to see what goes wrong where.

1.2.5. Generating documentation

Note

It is not recommended to build documentation in HPC environments.

Documentation is generated almost completely automatically from the source code

using Sphinx. It is extracted from the doc-strings in your code. Doc-strings are the

text between triple double quote pairs in the examples above, e.g. """This is a

doc-string.""". Important doc-strings are:

module doc-strings: at the beginning of the module. Provides an overview of what the module is for.

class doc-strings: right after the class statement. Explains what the class is for. Usually, the doc-string of the __init__ method is put here as well, as dunder methods (starting and ending with a double underscore) are not automatically considered by Sphinx.

method doc-strings: right after a def statement. Class methods should also get a doc-string.

According to PEP 287 the recommended format for Python doc-strings is reStructuredText. E.g. a typical method doc-string looks like this:

def hello_world(who='world'):
    """Short (one line) description of the hello_world method.

    A detailed description of the hello_world method.
    blablabla...

    :param str who: an explanation of the who parameter. You should
        mention e.g. its default value.
    :returns: a description of what hello_world returns (if relevant).
    :raises: which exceptions are raised under what conditions.
    """
    # here goes your code ...

Here, you can find some more examples.

Thus, if you take good care writing doc-strings, helpful documentation follows automatically.
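Doc-strings are not just comments: Python stores them on the object, so that tools can retrieve them at run time. The sketch below uses the standard inspect module to read a doc-string back, which is, roughly speaking, the raw material that documentation generators work with. The body and doc-string of hello_world are filled in here for the sake of the example.

```python
import inspect


def hello_world(who="world"):
    """Return a greeting.

    :param str who: whom to greet, defaults to ``"world"``.
    :returns: the greeting as a string.
    """
    return f"Hello {who}!"


# inspect.getdoc returns the doc-string with its indentation cleaned up.
doc = inspect.getdoc(hello_world)
summary = doc.splitlines()[0]   # the short one-line description
print(summary)
print(doc)
```

The same text is what help(hello_world) shows at the Python prompt.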

Micc2 sets up all the necessary components for documentation generation in the

docs directory. To generate documentation in html format, run:

(.venv-my-first-project) > micc2 doc

This will generate documentation in html format in directory my-first-project/docs/_build/html. The default html theme for this is sphinx_rtd_theme. To view the documentation open the file my-first-project/docs/_build/html/index.html in your favorite browser. Formats other than html are available, but you might have to install additional packages. To list all available documentation formats run:

> micc2 doc help

The boilerplate code for documentation generation is in the docs

directory, just as if it were generated manually using the sphinx-quickstart

command. Modifying those files is not recommended, and only rarely needed. Then

there are a number of .rst files in the project directory with capitalized

names:

README.rst is assumed to contain an overview of the project. This file has some boilerplate text, but must essentially be maintained by the authors of the project.

AUTHORS.rst lists the contributors to the project.

CHANGELOG.rst is supposed to describe the changes that were made to the code from version to version. This file must be maintained entirely by the authors of the project.

API.rst describes the classes and methods of the project in detail. This file is automatically updated when new components are added through Micc2 commands.

APPS.rst describes command line interfaces or apps added to your project. Just as API.rst, it is automatically updated when new CLIs are added through Micc2 commands. For CLIs the documentation is extracted from the help parameters of the command options with the help of Sphinx_click.

Note

The .rst extension stands for reStructuredText. It is a simple and

concise approach to text formatting. See

RestructuredText Primer

for an overview.

1.2.6. Version control

Version control is extremely important for any software project with a lifetime of more than a day. Micc2 facilitates version control by automatically creating a local git repository in your project directory. If you do not want to use it, you may ignore it or even delete it. If you have set up Micc2 correctly, it can even create remote GitHub repositories for your project, public as well as private.

Git is a version control system (VCS) that solves many practical problems related to the process of software development, regardless of whether you are the only developer or whether there is an entire team working on it from different places in the world. You find more information about how Micc2 cooperates with Git in 5. Version control and version management.

1.3. Miscellaneous

1.3.1. License

When you set up Micc2 you can select the default license for your Micc2 projects. You can choose between:

MIT license

BSD license

ISC license

Apache Software License 2.0

GNU General Public License v3

Not open source

If you’re unsure which license to choose, you can use resources such as GitHub’s Choose a License. You can always override the default license when you create a project. The first characters suffice to select the license:

micc2 --software-license=BSD create

The project directory will contain a LICENCE file, a plain text file

describing the license applicable to your project.

1.3.2. The pyproject.toml file

Micc2 maintains a pyproject.toml file in the project directory. This is

the modern way to describe the build system requirements of a project (see

PEP 518 ). Although this

file’s content is generated automatically some understanding of it is useful

(check out https://poetry.eustace.io/docs/pyproject/).

In Micc2’s predecessor, Micc, Poetry was used extensively for creating virtual environments and managing a project’s dependencies. However, at the time of writing, Poetry still fails to create virtual environments which honor the --system-site-packages flag. This causes serious problems on HPC clusters, and consequently, we do not recommend the use of Poetry when your projects have to run on HPC clusters. As long as this issue remains, we recommend adding a project’s dependencies manually in the pyproject.toml file, so that when someone installs your project with Pip, its dependencies are installed with it. Poetry nevertheless remains very useful for publishing your project to PyPI from your desktop or laptop.

The pyproject.toml file is rather human-readable. Most entries are trivial. There is a section for dependencies, [tool.poetry.dependencies], and one for development dependencies, [tool.poetry.dev-dependencies]. You can maintain these manually. There is also a section for CLIs, [tool.poetry.scripts], which is updated automatically whenever you add a CLI through Micc2.

> cat pyproject.toml

[tool.poetry]

name = "my-first-project"

version = "0.0.0"

description = "My first micc2 project"

authors = ["John Doe <john.doe@example.com>"]

license = "MIT"

readme = 'Readme.rst'

repository = "https://github.com/jdoe/my-first-project"

homepage = "https://github.com/jdoe/my-first-project"

[tool.poetry.dependencies]

python = "^3.7"

[tool.poetry.dev-dependencies]

[tool.poetry.scripts]

[build-system]

requires = ["poetry>=0.12"]

build-backend = "poetry.masonry.api"

2. A first real project

Let’s start with a simple problem: a Python module that computes the scalar product of two arrays, generally referred to as the dot product. Admittedly, this is not a very rewarding goal, as there are already many Python packages, e.g. Numpy, that solve this problem in an elegant and efficient way. However, because the dot product is such a simple concept in linear algebra, it allows us to illustrate the usefulness of Python as a language for HPC, as well as the capabilities of Micc2.

First, we set up a new project for this dot product, with the name ET-dot, ET being my initials (check out 1.1.1. What’s in a name).

> micc2 create ET-dot --remote=none

[INFO] [ Creating project directory (ET-dot):

[INFO] Python top-level package (et_dot):

[INFO] [ Creating local git repository

[INFO] ] done.

[WARNING] Creation of remote GitHub repository not requested.

[INFO] ] done.

We cd into the project directory, so Micc2 knows it as the current project.

> cd ET-dot

Now, open module file et_dot/__init__.py in your favourite editor and start coding a dot product method as below. The example code created by Micc2 can be removed.

# -*- coding: utf-8 -*-
"""
Package et_dot
==============

Python module for computing the dot product of two arrays.
"""

__version__ = "0.0.0"


def dot(a, b):
    """Compute the dot product of *a* and *b*.

    :param a: a 1D array.
    :param b: a 1D array of the same length as *a*.
    :returns: the dot product of *a* and *b*.
    :raises: ValueError if ``len(a)!=len(b)``.
    """
    n = len(a)
    if len(b) != n:
        raise ValueError("dot(a,b) requires len(a)==len(b).")
    result = 0
    for i in range(n):
        result += a[i] * b[i]
    return result

We defined a dot() method with an informative doc-string that describes

the parameters, the return value and the kind of exceptions it may raise. If you like,

you can add an if __name__ == '__main__': clause for quick-and-dirty testing or

debugging (see 1.2.3. Modules and scripts). It is a good idea to commit this

implementation to the local git repository:

> git commit -a -m 'implemented dot()'

[main d452a13] implemented dot()

1 file changed, 23 insertions(+), 22 deletions(-)

rewrite et_dot/__init__.py (71%)

(If there was a remote GitHub repository, you could also push that commit with git push, so as to enable your colleagues to access the code as well.)

We can use the dot method in a script as follows:

from et_dot import dot

a = [1,2,3]

b = [4.1,4.2,4.3]

a_dot_b = dot(a,b)

Or we might execute these lines at the Python prompt:

>>> from et_dot import dot

>>> a = [1,2,3]

>>> b = [4.1,4.2,4.3]

>>> a_dot_b = dot(a,b)

>>> expected = 1*4.1 + 2*4.2 +3*4.3

>>> print(f"a_dot_b = {a_dot_b} == {expected}")

a_dot_b = 25.4 == 25.4

Note

This dot product implementation is naive for several reasons:

Python is very slow at executing loops, as compared to Fortran or C++.

The objects we are passing in are plain Python lists. A list is a very powerful data structure, with array-like properties, but it is not exactly an array. A list is in fact an array of pointers to Python objects, and therefore list elements can reference anything, not just a numeric value as we would expect from an array. With elements being pointers, looping over the array elements implies non-contiguous memory access, another source of inefficiency.

The dot product is a subject of Linear Algebra. Many excellent libraries have been designed for this purpose. Numpy should be your starting point because it is well integrated with many other Python packages. There is also Eigen, a C++ template library for linear algebra that is neatly exposed to Python by pybind11.

However, starting out with a simple and naive implementation is not a bad idea at all. Once it is proven correct, it can serve as reference implementation to validate later improvements.
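
As a minimal sketch of how the naive version can serve as a reference, the code below re-declares a simplified stand-in for dot() (without the doc-string of the version above) and checks an alternative against it. The name dot_zip and its use of zip() and sum() are assumptions made for this illustration; they are not part of ET-dot.

```python
import math
import random

def dot(a, b):
    """Reference implementation: simplified stand-in for et_dot.dot()."""
    if len(a) != len(b):
        raise ArithmeticError("dot(a,b) requires len(a)==len(b).")
    result = 0
    for i in range(len(a)):
        result += a[i] * b[i]
    return result

def dot_zip(a, b):
    """Hypothetical alternative: same arithmetic, no explicit indexing."""
    if len(a) != len(b):
        raise ArithmeticError("dot_zip(a,b) requires len(a)==len(b).")
    return sum(x * y for x, y in zip(a, b))

random.seed(0)  # reproducible input
a = [random.random() for _ in range(100)]
b = [random.random() for _ in range(100)]

# Validate the alternative against the reference implementation.
# Compare with a tolerance: the two results may differ by round-off.
reference = dot(a, b)
candidate = dot_zip(a, b)
agrees = math.isclose(candidate, reference, rel_tol=1e-12)
print(agrees)
```

The same pattern applies to any later, faster implementation: keep the naive version around and compare against it in the tests.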

2.1. Testing the code

In order to prove that our implementation of the dot product is correct, we write some

tests. Open the file tests/et_dot/test_et_dot.py, remove the original

tests put in by micc2, and add a new one like below:

import et_dot

def test_dot_aa():

    a = [1,2,3]

    expected = 14

    result = et_dot.dot(a,a)

    assert result==expected

The test test_dot_aa() defines an array with 3 int numbers, and

computes the dot product with itself. The expected result is easily calculated by

hand. Save the file, and run the test, using Pytest as explained in

1.2.4. Testing your code. Pytest will show a line for every test source file, and on

each such line a . will appear for every successful test, and an F for a failing

test. Here is the result:

> pytest tests

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

collected 1 item

tests/et_dot/test_et_dot.py . [100%]

============================== 1 passed in 0.02s ===============================

Great, our test succeeds. If you want some more detail you can add the -v flag.

Pytest always captures the output without showing it. If you need to see it to help you

understand errors, add the -s flag.

We have thus added a single test and verified that it works by running pytest. It is good practice to commit this to our local git repository:

> git commit -a -m 'added test_dot_aa()'

[main 406f097] added test_dot_aa()

1 file changed, 9 insertions(+), 36 deletions(-)

rewrite tests/et_dot/test_et_dot.py (98%)

Obviously, our test tests only one particular case, and, perhaps, other cases might

fail. A clever way of testing is to focus on properties. From mathematics we know that

the dot product is commutative. Let’s add a test for that. Open

test_et_dot.py again and add this code:

import et_dot

import random

def test_dot_commutative():

    # create two arrays of length 10 with random float numbers:

    a = []

    b = []

    for _ in range(10):

        a.append(random.random())

        b.append(random.random())

    # test commutativity:

    ab = et_dot.dot(a,b)

    ba = et_dot.dot(b,a)

    assert ab==ba

Note

Focussing on mathematical properties sometimes requires a bit more thought. Our mathematical intuition is based on the properties of real numbers - which, as a matter of fact, have infinite precision. Programming languages, however, use floating point numbers, which have a finite precision. The mathematical properties for floating point numbers are not the same as for real numbers. We’ll come to that later.

> pytest tests -v

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1 -- /Users/etijskens/.pyenv/versions/3.8.5/bin/python

cachedir: .pytest_cache

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

collecting ... collected 2 items

tests/test_et_dot.py::test_dot_commutative PASSED [ 50%]

tests/et_dot/test_et_dot.py::test_dot_aa PASSED [100%]

============================== 2 passed in 0.02s ===============================

The new test passes as well.
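
The remark in the note above can be made concrete with a short sketch. A single floating point addition or multiplication is commutative, which is why the test passes, but addition is not associative: regrouping the terms can change the last digit. The values 0.1, 0.2 and 0.3 are arbitrary illustrative choices.

```python
x, y, z = 0.1, 0.2, 0.3

# Commutativity holds for a single floating point operation.
commutes = (x + y == y + x) and (x * y == y * x)

# Associativity does not hold in general: rounding happens after each
# operation, so the grouping of the terms matters.
left = (x + y) + z
right = x + (y + z)
associates = (left == right)

print(commutes, associates)
print(left, right)
```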

Above we used the random module from Python’s standard library for generating the random numbers that populate the arrays. Every time we run the test, different random numbers will be generated. That makes the test more powerful and weaker at the same time. By running the test over and over again, new random arrays will be tested, growing our confidence in our dot product implementation. Suppose, however, that all of a sudden the test fails. What are we going to do? We know that something is wrong, but we have no means of investigating the source of the error, because the next time we run the test the arrays will be different again and the test may succeed again. The test is irreproducible. Fortunately, that can be fixed by setting the seed of the random number generator:

def test_dot_commutative():

    # Fix the seed for the random number generator of module random.

    random.seed(0)

    # choose array size

    n = 10

    # create two arrays of length n with zeroes:

    a = n*[0]

    b = n*[0]

    # repeat the test 1000 times:

    for _ in range(1000):

        for i in range(n):

            a[i] = random.random()

            b[i] = random.random()

        # test commutativity:

        ab = et_dot.dot(a,b)

        ba = et_dot.dot(b,a)

        assert ab==ba

> pytest tests -v

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1 -- /Users/etijskens/.pyenv/versions/3.8.5/bin/python

cachedir: .pytest_cache

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

collecting ... collected 2 items

tests/test_et_dot.py::test_dot_commutative PASSED [ 50%]

tests/et_dot/test_et_dot.py::test_dot_aa PASSED [100%]

============================== 2 passed in 0.02s ===============================

The 1000 tests all pass. If, say, test 315 were to fail, it would fail every time we run it, and the source of the error could be investigated.
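
The effect of fixing the seed can be checked in isolation. The sketch below uses only the standard library and shows that the same seed reproduces the same sequence. The helper name random_pairs is hypothetical.

```python
import random

def random_pairs(seed, n=3):
    """Return n pairs of pseudo-random numbers generated from a fixed seed."""
    rng = random.Random(seed)  # private generator, leaves the global state alone
    return [(rng.random(), rng.random()) for _ in range(n)]

first_run = random_pairs(seed=0)
second_run = random_pairs(seed=0)
other_seed = random_pairs(seed=1)

# Same seed, same sequence: a failing case can be replayed exactly.
print(first_run == second_run)
# A different seed gives a different sequence.
print(first_run == other_seed)
```

Using a private random.Random instance is a design choice that keeps tests from influencing each other through the shared global generator; calling random.seed(0), as in the test above, works as well.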

Another property is that the dot product of an array of ones with another array is the sum of the elements of the other array. Let us add another test for that:

def test_dot_one():

    # Fix the seed for the random number generator of module random.

    random.seed(0)

    # choose array size

    n = 10

    # create two arrays of length n with zeroes, resp. ones:

    a = n*[0]

    one = n*[1]

    # repeat the test 1000 times:

    for _ in range(1000):

        for i in range(n):

            a[i] = random.random()

        # test:

        aone = et_dot.dot(a,one)

        expected = sum(a)

        assert aone==expected

> pytest tests -v

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1 -- /Users/etijskens/.pyenv/versions/3.8.5/bin/python

cachedir: .pytest_cache

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

collecting ... collected 3 items

tests/test_et_dot.py::test_dot_commutative PASSED [ 33%]

tests/test_et_dot.py::test_dot_one PASSED [ 66%]

tests/et_dot/test_et_dot.py::test_dot_aa PASSED [100%]

============================== 3 passed in 0.02s ===============================

Success again. We are getting quite confident in the correctness of our implementation. Here is yet another test:

def test_dot_one_2():

    a1 = 1.0e16

    a = [a1 , 1.0, -a1]

    one = [1.0, 1.0, 1.0]

    # test:

    aone = et_dot.dot(a,one)

    expected = 1.0

    assert aone == expected

Clearly, it is a special case of the test above. The expected result is the sum of the

elements in a, that is 1.0. Yet it - unexpectedly - fails. Fortunately

pytest produces a readable report about the failure:

> pytest tests -v

============================= test session starts ==============================

platform darwin -- Python 3.8.5, pytest-6.2.2, py-1.11.0, pluggy-0.13.1 -- /Users/etijskens/.pyenv/versions/3.8.5/bin/python

cachedir: .pytest_cache

rootdir: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

collecting ... collected 4 items

tests/test_et_dot.py::test_dot_commutative PASSED [ 25%]

tests/test_et_dot.py::test_dot_one PASSED [ 50%]

tests/test_et_dot.py::test_dot_one_2 FAILED [ 75%]

tests/et_dot/test_et_dot.py::test_dot_aa PASSED [100%]

=================================== FAILURES ===================================

________________________________ test_dot_one_2 ________________________________

def test_dot_one_2():

a1 = 1.0e16

a = [a1 , 1.0, -a1]

one = [1.0, 1.0, 1.0]

# test:

aone = et_dot.dot(a,one)

expected = 1.0

> assert aone == expected

E assert 0.0 == 1.0

E +0.0

E -1.0

tests/test_et_dot.py:57: AssertionError

=========================== short test summary info ============================

FAILED tests/test_et_dot.py::test_dot_one_2 - assert 0.0 == 1.0

========================= 1 failed, 3 passed in 0.04s ==========================

Mathematically, our expectations about the outcome of the test are certainly correct. Yet, pytest tells us it found that the result is 0.0 rather than 1.0. What could possibly be wrong? Well, our mathematical expectations are based on our assumption that the elements of a are real numbers. They aren’t. The elements of a are floating point numbers, which can only represent a finite number of decimal digits. Double precision numbers, which are the default floating point type in Python, carry about 16 significant decimal digits, single precision numbers about 7. Observe the consequences of this in the Python statements below:

>>> print( 1.0 + 1e16 )

1e+16

>>> print( 1e16 + 1.0 )

1e+16

Because 1e16 is a 1 followed by 16 zeroes, adding 1 would alter the 17th digit, which is, because of the finite precision, not represented. An approximate result is returned, namely 1e16, which is off by a relative error of only 1e-16.
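
These limits can be queried from Python itself. The following sketch uses sys.float_info from the standard library and only restates the observations above in executable form.

```python
import sys

# Number of decimal digits that a double can always represent faithfully.
digits = sys.float_info.dig
# Machine epsilon: gap between 1.0 and the next larger double.
eps = sys.float_info.epsilon

# Adding 1.0 to 1e16 is lost in the rounding ...
absorbed = (1e16 + 1.0 == 1e16)
# ... whereas adding 2.0 is not, because 1e16 + 2.0 is representable.
kept = (1e16 + 2.0 != 1e16)

print(digits, eps)
print(absorbed, kept)
```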

>>> print( 1e16 + 1.0 - 1e16 )

0.0

>>> print( 1e16 - 1e16 + 1.0 )

1.0

>>> print( 1.0 + 1e16 - 1e16 )

0.0

Although each of these expressions would yield 1.0 if they were evaluated with real numbers, the results differ because of the finite precision. Python evaluates the expressions from left to right, so they are equivalent to:

1e16 + 1.0 - 1e16 = ( 1e16 + 1.0 ) - 1e16 = 1e16 - 1e16 = 0.0

1e16 - 1e16 + 1.0 = ( 1e16 - 1e16 ) + 1.0 = 0.0 + 1.0 = 1.0

1.0 + 1e16 - 1e16 = ( 1.0 + 1e16 ) - 1e16 = 1e16 - 1e16 = 0.0
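
When the order of the terms cannot be controlled, the standard library offers math.fsum(), which tracks the intermediate round-off and returns an accurately rounded sum. The sketch below applies it to the three terms above. ET-dot itself does not use fsum; it is shown here only to illustrate the effect of the summation order.

```python
import math

terms = [1e16, 1.0, -1e16]

# Plain left-to-right summation loses the 1.0.
naive = 0.0
for t in terms:
    naive += t

# math.fsum keeps track of the lost low-order bits.
accurate = math.fsum(terms)

print(naive, accurate)
```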

There are several lessons to be learned from this:

The test does not fail because our code is wrong, but because our mind is used to reasoning about real number arithmetic, rather than floating point arithmetic. As the latter is subject to round-off errors, tests sometimes fail unexpectedly. Note that for comparing floating point numbers the standard library provides the math.isclose() function.

Another silent assumption by which we can be misled is in the random numbers. In fact, random.random() generates pseudo-random numbers in the interval [0,1[, which is quite a bit smaller than ]-inf,+inf[. No matter how often we run the test, the special case above that fails will never be encountered, which may lead to unwarranted confidence in the code.
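
A brief sketch of how math.isclose() behaves may help here. By default it uses a relative tolerance only (rel_tol=1e-09, abs_tol=0.0), which matters when one of the numbers is zero. The numbers below are illustrative.

```python
import math

# Round-off makes the exact comparison fail, isclose succeeds.
exact = (0.1 + 0.2 == 0.3)
close = math.isclose(0.1 + 0.2, 0.3)

# A relative tolerance is useless when comparing against 0.0 ...
near_zero_rel = math.isclose(1e-12, 0.0)
# ... an absolute tolerance is needed in that case.
near_zero_abs = math.isclose(1e-12, 0.0, abs_tol=1e-9)

print(exact, close, near_zero_rel, near_zero_abs)
```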

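So let us fix the failing test. We negate the condition of the original test, to document that the exact comparison fails, and use math.isclose() to account for round-off errors. Because the round-off error scales with the magnitude of the largest term, a1, rather than with the expected result, the tolerance is specified as an absolute tolerance proportional to a1:

import math

def test_dot_one_2():

    a1 = 1.0e16

    a = [a1 , 1.0, -a1]

    one = [1.0, 1.0, 1.0]

    # test:

    aone = et_dot.dot(a,one)

    expected = 1.0

    # the exact comparison fails because of round-off:

    assert aone != expected

    # the error is small relative to the largest term a1:

    assert math.isclose(aone, expected, abs_tol=1e-15*a1)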

Another aspect that deserves testing is the behavior of the code in exceptional circumstances. Does it indeed raise ArithmeticError if the arguments are not of the same length?

import pytest

def test_dot_unequal_length():

    a = [1,2]

    b = [1,2,3]

    with pytest.raises(ArithmeticError):

        et_dot.dot(a,b)

Here, pytest.raises() is a context manager that verifies that ArithmeticError is raised when its body is executed. The test will succeed if the code indeed raises ArithmeticError, and fail if it does not. For an explanation of context managers see The Curious Case of Python’s Context Manager. Note that you can easily make et_dot.dot() raise other exceptions, e.g. TypeError, by passing in arrays of non-numeric types:

>>> import et_dot

>>> et_dot.dot([1,2],[1,'two'])

Traceback (most recent call last):

File "/Users/etijskens/.local/lib/python3.8/site-packages/et_rstor/__init__.py", line 445, in rstor

exec(line)

File "<string>", line 1, in <module>

File "./et_dot/__init__.py", line 22, in dot

result += a[i]*b[i]

TypeError: unsupported operand type(s) for +=: 'int' and 'str'

Note that it is not the product a[i]*b[i] for i=1 that is wreaking havoc, but the addition of that product to the accumulator result. Furthermore, do not mind the reference to et_rstor in the traceback. It is due to the fact that this course is completely generated with Python rather than written by hand.
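
The two steps can be reproduced separately. In the sketch below, the product succeeds because multiplying a str by an int repeats the string, and only the subsequent addition to the int accumulator fails.

```python
# Step 1: the product is legal, it repeats the string.
product = 2 * 'two'

# Step 2: adding the str to the int accumulator raises TypeError.
result = 1  # value of the accumulator after the first iteration (1*1)
try:
    result += product
    error = None
except TypeError as exc:
    error = exc

print(repr(product))
print(type(error).__name__)
```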

More tests could be devised, but the current tests give us sufficient confidence. The point where you stop testing and move on with the next issue, feature, or project is subject to various considerations, such as confidence, experience, problem understanding, and time pressure. In any case this is a good point to commit changes and additions, increase the version number string, and commit the version bump as well:

> git add tests

> git commit -a -m 'dot() tests added'

[main ff3d8ae] dot() tests added

1 file changed, 73 insertions(+)

create mode 100644 tests/test_et_dot.py

> micc2 version -p

[INFO] (ET-dot)> version (0.0.0) -> (0.0.1)

> git commit -a -m 'v0.0.1'

[main 370795b] v0.0.1

2 files changed, 2 insertions(+), 2 deletions(-)

The micc2 version flag -p is shorthand for --patch, and requests

incrementing the patch (=last) component of the version string, as seen in the

output. The minor component can be incremented with -m or --minor, the major

component with -M or --major.
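
To make the three components concrete, here is a small sketch of how such an increment can work. The function bump() is hypothetical and is not micc2's implementation; it assumes the common semantic-versioning convention that incrementing a component resets the components to its right to zero.

```python
def bump(version, part):
    """Increment one component of a 'major.minor.patch' version string.

    Hypothetical helper for illustration; components to the right of the
    incremented one are reset to zero.
    """
    major, minor, patch = (int(x) for x in version.split('.'))
    if part == 'major':
        return f"{major + 1}.0.0"
    if part == 'minor':
        return f"{major}.{minor + 1}.0"
    if part == 'patch':
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version component: {part!r}")

print(bump('0.0.0', 'patch'))
print(bump('0.0.1', 'minor'))
print(bump('0.1.0', 'major'))
```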

At this point you might notice that even for a very simple and well defined function such as the dot product, the amount of test code easily exceeds the amount of tested code by a factor of 5 or more. This is not at all uncommon. As the tested code here is an isolated piece of code, you will probably leave it alone as soon as it passes the tests and you are confident in the solution. If at some point dot() should fail, you should add a test that reproduces the error and improve the solution so that it passes the test.

When constructing software for more complex problems, there will be several interacting components, and running the tests after modifying one of the components will help you ensure that all components still play well together, and spot problems as soon as possible.

2.2. Improving efficiency

There are times when just a correct solution to the problem at hand is sufficient. If ET-dot is meant to compute a few dot products of small arrays, the naive implementation above will probably be sufficient. However, if it is to be used many times and for large arrays and the user is impatiently waiting for the answer, or if your computing resources are scarce, a more efficient implementation is needed. Especially in scientific computing and high performance computing, where compute tasks may run for days using hundreds or even thousands of compute nodes and resources are to be shared with many researchers, using the resources efficiently is of utmost importance and efficient implementations are therefore indispensable.

However important efficiency may be, it is nevertheless a good strategy, when developing a new piece of code, to start out with a simple, even naive implementation, neglecting efficiency considerations totally and focussing on correctness instead. Python has a reputation of being an extremely productive programming language, which makes it well suited for this first step. Once you have proven the correctness of this first version, it can serve as a reference solution to verify the correctness of later, more efficient implementations. In addition, the analysis of this version can highlight the sources of inefficiency and help you focus your attention on the parts that really need it.

2.2.1. Timing your code

The simplest way to probe the efficiency of your code is to time it: write a simple script and record how long it takes to execute. Here’s a script that computes the dot product of two long arrays of random numbers.

"""File prof/run1.py"""

import random

from et_dot import dot # the dot method is all we need from et_dot

def random_array(n=1000):

    """Create an array with n random numbers in [0,1[."""

    # Below we use a list comprehension (a Python idiom for

    # creating a list from an iterable object).

    a = [random.random() for i in range(n)]

    return a

if __name__=='__main__':

    a = random_array()

    b = random_array()

    print(dot(a, b))

    print("-*# done #*-")

Executing this script yields:

> python ./prof/run1.py

247.78383180344838

-*# done #*-

Note

Every run of this script yields a slightly different outcome because we did not fix random.seed(). It will, however, typically be around 250. Since the average outcome of random.random() is 0.5, every entry contributes on average 0.5*0.5 = 0.25, and as there are 1000 contributions, that makes on average 250.0.
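
This estimate can be checked numerically. The sketch below averages the dot product over a number of runs. It re-declares a simplified stand-in for dot() so that it runs on its own, and the number of runs (200) is an arbitrary choice.

```python
import random

def dot(a, b):
    """Simplified stand-in for et_dot.dot()."""
    result = 0
    for i in range(len(a)):
        result += a[i] * b[i]
    return result

def random_array(n=1000):
    """Create an array with n random numbers in [0,1[."""
    return [random.random() for _ in range(n)]

random.seed(0)  # make the experiment reproducible
runs = 200
mean = sum(dot(random_array(), random_array()) for _ in range(runs)) / runs

# The mean should be close to n * 0.5 * 0.5 = 250.
print(mean)
```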

We are now ready to time our script. There are many ways to achieve this. Here is a

particularly good introduction.

The et-stopwatch project takes this a little further. It can be installed in your current Python environment with pip:

> python -m pip install et-stopwatch

Requirement already satisfied: et-stopwatch in /Users/etijskens/.pyenv/versions/3.8.5/lib/python3.8/site-packages (1.0.5)

The output shows that et-stopwatch was already installed in this Python environment.

To time the script above, modify it as below, using the Stopwatch class

as a context manager:

"""File prof/run1.py"""

import random

from et_dot import dot # the dot method is all we need from et_dot

from et_stopwatch import Stopwatch

def random_array(n=1000):

    """Create an array with n random numbers in [0,1[."""

    # Below we use a list comprehension (a Python idiom for

    # creating a list from an iterable object).

    a = [random.random() for i in range(n)]

    return a

if __name__=='__main__':

    with Stopwatch(message="init"):

        a = random_array()

        b = random_array()

    with Stopwatch(message="dot "):

        a_dot_b = dot(a, b)

    print(a_dot_b)

    print("-*# done #*-")

and execute it again:

> python ./prof/run1.py

init : 0.000558 s

dot : 0.000182 s

240.2698949846254

-*# done #*-

When the script is executed, each with block prints the time it takes to execute its body. The first with block times the initialisation of the arrays, and the second times the computation of the dot product. Note that in this run the initialisation of the arrays takes about three times longer than the dot product computation. Computing random numbers is expensive.
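
For readers curious how a context manager can time its body, here is a minimal sketch built on time.perf_counter() from the standard library. The class Timer is hypothetical; it is not the implementation of et_stopwatch.Stopwatch and only mimics the way it is used above.

```python
import time

class Timer:
    """Hypothetical minimal timing context manager (not et-stopwatch)."""

    def __init__(self, message=""):
        self.message = message
        self.elapsed = None

    def __enter__(self):
        self.start = time.perf_counter()
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.elapsed = time.perf_counter() - self.start
        print(f"{self.message} : {self.elapsed:.6f} s")
        return False  # do not swallow exceptions raised in the body

with Timer(message="sum") as timer:
    total = sum(range(100_000))
```

Timings of such short code fragments fluctuate from run to run, so it is wise to repeat a measurement a few times before drawing conclusions.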

2.2.2. Comparison to Numpy

As said earlier, our implementation of the dot product is rather naive. If you want to become a good programmer, you should understand that you are probably not the first researcher in need of a dot product implementation. For most linear algebra problems, Numpy provides very efficient implementations. Below, the modified run1.py script adds timing results for the Numpy equivalent of our code.

"""File prof/run1.py"""

# ...

import numpy as np

if __name__=='__main__':

    with Stopwatch(message="et init"):

        a = random_array()

        b = random_array()

    with Stopwatch(message="et dot "):

        dot(a,b)

    with Stopwatch(message="np init"):

        a = np.random.rand(1000)

        b = np.random.rand(1000)

    with Stopwatch(message="np dot "):

        np.dot(a,b)

    print("-*# done #*-")

Its execution yields:

> python ./prof/run1.py

et init : 0.000295 s

et dot : 0.000132 s

np init : 7.3e-05 s

np dot : 9e-06 s

-*# done #*-

Obviously, numpy does significantly better than our naive dot product implementation. In the run above it completes the dot product in roughly 7% of the time. It is important to understand the reasons for this improvement:

Numpy arrays are contiguous data structures of floating point numbers, unlike Python’s list, which we have been using for our arrays so far. Each item of a Python list is in fact a pointer that can point to an arbitrary Python object. The items in a Python list may even belong to different types. Contiguous memory access is far more efficient. In addition, the memory footprint of a numpy array is significantly lower than that of a plain Python list.

The loop over Numpy arrays is implemented in a low-level programming language, like C, C++ or Fortran. This makes it possible to take full advantage of the processor’s hardware features, such as vectorization and fused multiply-add (FMA).

Note

Note that the initialisation of the arrays with numpy is also about 4 times faster in the run above, for roughly the same reasons.
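
The difference in memory layout can be made visible without Numpy. The sketch below uses array.array from the standard library as a stand-in for a contiguous array of doubles (an assumption made to keep the example free of dependencies; a Numpy array stores its data in a similar contiguous fashion) and compares its footprint with that of a list of float objects.

```python
import array
import random
import sys

n = 1000
values = [random.random() for _ in range(n)]

# A list stores n pointers, each referring to a separate float object.
list_bytes = sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)

# array.array('d') stores the n doubles themselves, back to back.
packed = array.array('d', values)
packed_bytes = sys.getsizeof(packed)

# A list accepts any object, a typed array does not.
mixed = [1, 'two', 3.0]

print(list_bytes, packed_bytes, packed.itemsize)
```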

2.2.3. Conclusion

There are several important generic lessons to be learned from this tutorial:

Always start your projects with a simple and straightforward implementation which can easily be proven to be correct, even if you know that it will not satisfy your efficiency constraints. You should use it as a reference solution to prove the correctness of later, more efficient implementations.

Write test code for proving correctness. Tests must be reproducible, and be run after every code extension or modification to ensure that the changes did not break the existing code.

Time your code to understand which parts are time consuming and which not. Optimize bottlenecks first and do not waste time optimizing code that does not contribute significantly to the total runtime. Optimized code is typically harder to read and may become a maintenance issue.

Before you write any code, in this case our dot product implementation, spend some time searching the internet to see what is already available. Especially in the field of scientific and high performance computing there are many excellent libraries available which are hard to beat. Use your precious time for new stuff. Consider adding new features to an existing codebase, rather than starting from scratch. It will improve your programming skills and gain you time, even though initially your progress may seem slower. It might also give your code more visibility, and more users, because you provide them with an extra feature on top of something they are already used to.

3. Binary extension modules

3.1. Introduction - High Performance Python

Suppose for a moment that the dot product implementation et_dot.dot() that we developed in tutorial 2 is way too slow to be practical for the research project that needs it, and that we did not have access to fast dot product implementations, such as numpy.dot(). The major advantage we took from Python is that coding et_dot.dot() was extremely easy, and even coding the tests wasn’t too difficult. In this tutorial you are about to discover that coding a highly efficient replacement for et_dot.dot() is not too difficult either.

There are several approaches for this. Here are a number of highly recommended links

covering them:

Two of the approaches discussed in the High Performance Python series involve rewriting your code in Modern Fortran or C++ and generating a shared library that can be imported in Python just as any Python module. This is exactly the approach taken in important HPC Python modules, such as Numpy, PyTorch and pandas. Such shared libraries are called binary extension modules. Constructing binary extension modules is by far the most scalable and flexible of all current acceleration strategies, as these languages are designed to squeeze the maximum of performance out of a CPU.

However, figuring out how to build such binary extension modules is a bit of a

challenge, especially in the case of C++. This is in fact one of the main reasons why

Micc2 was designed: facilitating the construction of binary extension modules and

enabling the developer to create high performance tools with ease. To that end,

Micc2 can provide boilerplate code for binary extensions as well as a practical

wrapper for building the binary extension modules, the micc2 build command.

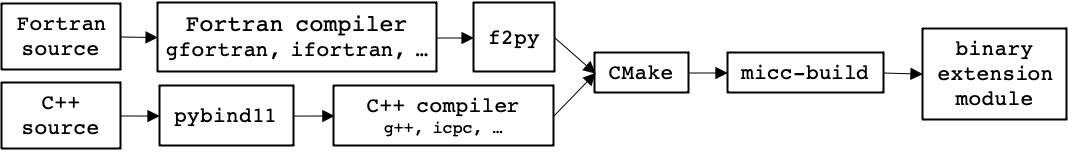

This command uses CMake to pass the build options to the compiler, while bridging the

gap between C++ and Fortran, on one hand and Python on the other hand using pybind11

and f2py. respectively. This is illustrated in the figure below:

There is a difference in how f2py and pybind11 operate. F2py is an executable that inspects the Fortran source code and creates wrappers for the subprograms it finds. These wrappers are C code, compiled and linked with the compiled Fortran code to build the extension module. Thus, f2py needs a Fortran compiler, as well as a C compiler. The Pybind11 approach is conceptually simpler. Pybind11 is a C++ template library that the programmer uses to express the interface between Python and C++. In fact the introspection is done by the programmer, and there is only one compiler round, using a C++ compiler. This gives the programmer more flexibility and control, but also a bit more work.

3.1.1. Choosing between Fortran and C++ for binary extension modules

Here are a number of arguments that you may wish to take into account for choosing the programming language for your binary extension modules:

Fortran is a simpler language than C++.

It is easier to write efficient code in Fortran than C++.

C++ is a general purpose language (as is Python), whereas Fortran is meant for scientific computing. Consequently, C++ is a much more expressive language.

C++ comes with a huge standard library, providing lots of data structures and algorithms that are hard to match in Fortran. If the standard library is not enough, there are also the highly recommended Boost libraries and many other high quality domain specific libraries. There are also domain specific libraries in Fortran, but their count differs by an order of magnitude at least.

With Pybind11 you can almost expose anything from the C++ side to Python, and vice versa, not just functions.

Modern Fortran is (imho) not as well documented as C++. Useful places to look for language features and idioms are:

Fortran: https://www.fortran90.org/

In short, C++ provides many more possibilities, but it is not for the novice. As to my own experience, I discovered that working on projects of moderate complexity I progressed significantly faster using Fortran rather than C++, despite the fact that my knowledge of Fortran is quite limited compared to C++. However, your mileage may vary.

3.2. Adding Binary extensions to a Micc2 project

Adding a binary extension to your current project is simple. To add a binary extension ‘foo’ written in (Modern) Fortran, run:

> micc2 add foo --f90

and for a C++ binary extension, run:

> micc2 add bar --cpp

The add subcommand adds a component to your project. It takes a name, here foo, and a flag to specify the kind of the component: --f90 for a Fortran binary extension module, --cpp for a C++ binary extension module. Other components are a Python sub-module with module structure (--module) or package structure (--package), and a CLI script (--cli and --clisub).

You can add as many components to your project as you want.

The binary modules are built with the micc2 build command:

> micc2 build foo

This builds the Fortran binary extension foo. To build all binary

extensions at once, just issue micc2 build.

As Micc2 always creates complete working examples, you can build the binary extensions right away and run their tests with pytest.

If there are no syntax errors the binary extensions will be built, and you will be able

to import the modules foo and bar in your project scripts and

use their subroutines and functions. Because foo and bar are

submodules of your Micc2 project, you must import them as:

import my_package.foo

import my_package.bar

# call foofun in my_package.foo

my_package.foo.foofun(...)

# call barfun in my_package.bar

my_package.bar.barfun(...)

3.2.1. Build options

Here is an overview of micc2 build options:

> micc2 build --help

Usage: micc2 build [OPTIONS] [MODULE]

Build binary extensions.

:param str module: build a binary extension module. If not specified or

all binary extension modules are built.

Options:

-b, --build-type TEXT build type: any of the standard CMake build types:

Release (default), Debug, RelWithDebInfo, MinSizeRel.

--clean Perform a clean build, removes the build directory

before the build, if there is one. Note that this

option is necessary if the extension's

``CMakeLists.txt`` was modified.

--cleanup Cleanup remove the build directory after a successful

build.

--help Show this message and exit.

3.3. Building binary extension modules from Fortran

So, in order to implement a more efficient dot product, let us add a Fortran binary

extension module with name dotf:

> micc2 add dotf --f90

[INFO] [ Adding f90 submodule dotf to package et_dot.

[INFO] - Fortran source in /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.f90.

[INFO] - build settings in /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/CMakeLists.txt.

[INFO] - module documentation in /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.rst (restructuredText format).

[INFO] - Python test code in /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/tests/et_dot/dotf/test_dotf.py.

[INFO] ] done.

The command now runs successfully, and the output tells us where to enter the Fortran source code, the build settings, the test code and the documentation of the added module. Everything related to the dotf sub-module is in subdirectory ET-dot/et_dot/dotf, except for its test code, which is in ET-dot/tests/et_dot/dotf. As usual, these files already contain working example code that you can inspect to learn how things work.

Let’s continue our development of a Fortran version of the dot product. Open file

ET-dot/et_dot/dotf/dotf.f90 in your favorite editor or IDE and replace

the existing example code in the Fortran source file with:

function dot(a,b,n)

! Compute the dot product of a and b

implicit none

real*8 :: dot ! return value

!-----------------------------------------------

! Declare function parameters

integer*4 , intent(in) :: n

real*8 , dimension(n), intent(in) :: a,b

!-----------------------------------------------

! Declare local variables

integer*4 :: i

!-----------------------------------------------

dot = 0.

do i=1,n

dot = dot + a(i) * b(i)

end do

end function dot

The binary extension module can now be built:

> micc2 build dotf

[INFO] [ Building f90 module 'et_dot/dotf':

[DEBUG] [ > cmake -D PYTHON_EXECUTABLE=/Users/etijskens/.pyenv/versions/3.8.5/bin/python ..

[DEBUG] (stdout)

-- The Fortran compiler identification is GNU 11.2.0

-- Checking whether Fortran compiler has -isysroot

-- Checking whether Fortran compiler has -isysroot - yes

-- Checking whether Fortran compiler supports OSX deployment target flag

-- Checking whether Fortran compiler supports OSX deployment target flag - yes

-- Detecting Fortran compiler ABI info

-- Detecting Fortran compiler ABI info - done

-- Check for working Fortran compiler: /usr/local/bin/gfortran - skipped

-- Checking whether /usr/local/bin/gfortran supports Fortran 90

-- Checking whether /usr/local/bin/gfortran supports Fortran 90 - yes

# Build settings ###################################################################################

CMAKE_Fortran_COMPILER: /usr/local/bin/gfortran

CMAKE_BUILD_TYPE : Release

F2PY_opt : --opt='-O3'

F2PY_arch :

F2PY_f90flags :

F2PY_debug :

F2PY_defines : -DNPY_NO_DEPRECATED_API=NPY_1_7_API_VERSION;-DF2PY_REPORT_ON_ARRAY_COPY=1;-DNDEBUG

F2PY_includes :

F2PY_linkdirs :

F2PY_linklibs :

module name : dotf.cpython-38-darwin.so

module filepath : /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/dotf.cpython-38-darwin.so

source : /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.f90

python executable : /Users/etijskens/.pyenv/versions/3.8.5/bin/python [version=Python 3.8.5]

f2py executable : /Users/etijskens/.pyenv/versions/3.8.5/bin/f2py [version=2]

####################################################################################################

-- Configuring done

-- Generating done

-- Build files have been written to: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build

[DEBUG] ] done.

[DEBUG] [ > make VERBOSE=1

[DEBUG] (stdout)

/usr/local/Cellar/cmake/3.21.2/bin/cmake -S/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf -B/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build --check-build-system CMakeFiles/Makefile.cmake 0

/usr/local/Cellar/cmake/3.21.2/bin/cmake -E cmake_progress_start /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/CMakeFiles /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build//CMakeFiles/progress.marks

/Library/Developer/CommandLineTools/usr/bin/make -f CMakeFiles/Makefile2 all

/Library/Developer/CommandLineTools/usr/bin/make -f CMakeFiles/dotf.dir/build.make CMakeFiles/dotf.dir/depend

cd /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build && /usr/local/Cellar/cmake/3.21.2/bin/cmake -E cmake_depends "Unix Makefiles" /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/CMakeFiles/dotf.dir/DependInfo.cmake --color=

/Library/Developer/CommandLineTools/usr/bin/make -f CMakeFiles/dotf.dir/build.make CMakeFiles/dotf.dir/build

[100%] Generating dotf.cpython-38-darwin.so

/Users/etijskens/.pyenv/versions/3.8.5/bin/f2py -m dotf -c --f90exec=/usr/local/bin/gfortran /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.f90 -DNPY_NO_DEPRECATED_API=NPY_1_7_API_VERSION -DF2PY_REPORT_ON_ARRAY_COPY=1 -DNDEBUG --opt='-O3' --build-dir /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build

running build

running config_cc

unifing config_cc, config, build_clib, build_ext, build commands --compiler options

running config_fc

unifing config_fc, config, build_clib, build_ext, build commands --fcompiler options

running build_src

build_src

building extension "dotf" sources

f2py options: []

f2py:> /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotfmodule.c

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8

Reading fortran codes...

Reading file '/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.f90' (format:free)

Post-processing...

Block: dotf

Block: dot

Post-processing (stage 2)...

Building modules...

Building module "dotf"...

Creating wrapper for Fortran function "dot"("dot")...

Constructing wrapper function "dot"...

dot = dot(a,b,[n])

Wrote C/API module "dotf" to file "/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotfmodule.c"

Fortran 77 wrappers are saved to "/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotf-f2pywrappers.f"

adding '/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/fortranobject.c' to sources.

adding '/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8' to include_dirs.

copying /Users/etijskens/.pyenv/versions/3.8.5/lib/python3.8/site-packages/numpy/f2py/src/fortranobject.c -> /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8

copying /Users/etijskens/.pyenv/versions/3.8.5/lib/python3.8/site-packages/numpy/f2py/src/fortranobject.h -> /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8

adding '/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotf-f2pywrappers.f' to sources.

build_src: building npy-pkg config files

running build_ext

customize UnixCCompiler

customize UnixCCompiler using build_ext

get_default_fcompiler: matching types: '['gnu95', 'nag', 'absoft', 'ibm', 'intel', 'gnu', 'g95', 'pg']'

customize Gnu95FCompiler

Found executable /usr/local/bin/gfortran

Found executable /usr/local/bin/gfortran

customize Gnu95FCompiler

customize Gnu95FCompiler using build_ext

building 'dotf' extension

compiling C sources

C compiler: clang -Wno-unused-result -Wsign-compare -Wunreachable-code -DNDEBUG -g -fwrapv -O3 -Wall -I/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include -I/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX.sdk/usr/include

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build

creating /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8

compile options: '-DNPY_NO_DEPRECATED_API=NPY_1_7_API_VERSION -DF2PY_REPORT_ON_ARRAY_COPY=1 -DNDEBUG -DNPY_DISABLE_OPTIMIZATION=1 -I/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8 -I/Users/etijskens/.pyenv/versions/3.8.5/lib/python3.8/site-packages/numpy/core/include -I/Users/etijskens/.pyenv/versions/3.8.5/include/python3.8 -c'

clang: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotfmodule.c

clang: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/fortranobject.c

/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotfmodule.c:144:12: warning: unused function 'f2py_size' [-Wunused-function]

static int f2py_size(PyArrayObject* var, ...)

           ^

1 warning generated.

compiling Fortran sources

Fortran f77 compiler: /usr/local/bin/gfortran -Wall -g -ffixed-form -fno-second-underscore -fPIC -O3

Fortran f90 compiler: /usr/local/bin/gfortran -Wall -g -fno-second-underscore -fPIC -O3

Fortran fix compiler: /usr/local/bin/gfortran -Wall -g -ffixed-form -fno-second-underscore -Wall -g -fno-second-underscore -fPIC -O3

compile options: '-DNPY_NO_DEPRECATED_API=NPY_1_7_API_VERSION -DF2PY_REPORT_ON_ARRAY_COPY=1 -DNDEBUG -I/Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8 -I/Users/etijskens/.pyenv/versions/3.8.5/lib/python3.8/site-packages/numpy/core/include -I/Users/etijskens/.pyenv/versions/3.8.5/include/python3.8 -c'

gfortran:f90: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/dotf.f90

gfortran:f77: /Users/etijskens/software/dev/workspace/et-micc2-tutorials-workspace-tmp/ET-dot/et_dot/dotf/_cmake_build/src.macosx-10.15-x86_64-3.8/dotf-f2pywrappers.f